ffmpeg -i YouTubeGimpLogoShortTutorial.mkv -vn -acodec copy audio.wav

ffmpeg -i YouTubeGimpLogoShortTutorial.mkv -i audio_fixed.wav -c:v copy -map 0:v:0 -map 1:a:0 YouTubeGimpLogoShortTutorial_FixedAudio.mkv
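The two ffmpeg steps above could be wrapped in a small script so the same fix can be repeated on other recordings. This is only a sketch: the function names `fixed_name`, `extract_audio` and `remux` are made up for illustration, and it assumes the repaired audio is always saved as audio_fixed.wav, as in the commands above.

```shell
#!/bin/sh
# Hypothetical helpers wrapping the two ffmpeg commands above.

# Derive the output name used above: foo.mkv -> foo_FixedAudio.mkv
fixed_name() {
    printf '%s_FixedAudio.mkv\n' "${1%.mkv}"
}

# Step 1: pull out the audio track only (-vn drops the video).
extract_audio() {
    ffmpeg -i "$1" -vn -acodec copy audio.wav
}

# Step 2: take video from the original file (input 0) and audio from
# the repaired WAV (input 1), copying the video stream untouched.
remux() {
    ffmpeg -i "$1" -i audio_fixed.wav -c:v copy \
        -map 0:v:0 -map 1:a:0 "$(fixed_name "$1")"
}

# Show the name the remux step would write to (does not run ffmpeg).
fixed_name YouTubeGimpLogoShortTutorial.mkv
```

Call `extract_audio file.mkv`, repair audio.wav in an editor of choice, save it as audio_fixed.wav, then call `remux file.mkv`.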

# emerge -av dev-python/pip

$ pip3 install --user ffmpeg-normalize

$ ~/.local/bin/ffmpeg-normalize *.mkv

$ ls normalized

ssh pi@192.168.1.38 sudo dd bs=4M if=/dev/mmcblk0 | gzip -c > raspberry_dd_4M.img.gz

gunzip raspberry_dd_4M.img.gz
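Before relying on a compressed card image it may be worth checking that the archive is intact. The sketch below runs the same dd-into-gzip pipeline on a small dummy file instead of a real SD card, so the paths (`fake_card.img` in a temporary directory) are stand-ins, not anything from the Pi.

```shell
#!/bin/sh
# Dummy 1 MiB "card" in a scratch directory (stand-in for /dev/mmcblk0).
tmp=$(mktemp -d)
head -c 1048576 /dev/urandom > "$tmp/fake_card.img"

# Same shape as the ssh pipeline above, minus the ssh hop.
dd bs=4M if="$tmp/fake_card.img" 2>/dev/null | gzip -c > "$tmp/fake_card.img.gz"

# gzip -t checks the archive's integrity without unpacking it to disk.
gzip -t "$tmp/fake_card.img.gz" && echo "archive OK"

# gunzip -c streams the contents so the .gz file is kept;
# cmp exits 0 only if the stream matches the source byte for byte.
gunzip -c "$tmp/fake_card.img.gz" | cmp - "$tmp/fake_card.img" && echo "contents match"
```

On the real image, `gzip -t raspberry_dd_4M.img.gz` is the relevant check. The byte-for-byte comparison needs access to the original card.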

fdisk -l raspberry_dd_4M.img

Disk raspberry_dd_4M.img: 3.7 GiB, 3947888640 bytes, 7710720 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x738a4d67

Device               Boot   Start      End  Sectors  Size Id Type
raspberry_dd_4M.img1         8192   532479   524288  256M  c W95 FAT32 (LBA)
raspberry_dd_4M.img2       532480  7710719  7178240  3.4G 83 Linux

mkdir mntloop1;mount -o ro,loop,offset=$((512*8192)) raspberry_dd_4M.img mntloop1

mkdir mntloop2;mount -o ro,loop,offset=$((512*532480)) raspberry_dd_4M.img mntloop2

mount: mntloop2: overlapping loop device exists for raspberry_dd_4M.img.

umount mntloop1

mkdir -p mntloop2;mount -o ro,loop,offset=$((512*532480)) raspberry_dd_4M.img mntloop2

mount: mntloop2: cannot mount /dev/loop0 read-only.

mkdir -p mntloop2;mount -o loop,offset=$((512*532480)) raspberry_dd_4M.img mntloop2
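The offsets used above are just the start sector times the 512 byte sector size from the fdisk listing. The sketch below works them out, along with each partition's length in bytes. Passing that length as `sizelimit=` is, as far as I know, the usual way to stop the first loop device covering the rest of the image, which is what triggers the "overlapping loop device" error. The helper name `sectors_to_bytes` is invented here, and the `noload` hint in the comments is an assumption about why the read-only mount was refused (an ext4 journal waiting to be replayed).

```shell
#!/bin/sh
# Sector size and partition geometry copied from the fdisk listing above.
SECTOR=512

# Hypothetical helper: convert a sector count to bytes.
sectors_to_bytes() {
    echo $(( $1 * SECTOR ))
}

boot_offset=$(sectors_to_bytes 8192)      # start of partition 1
boot_size=$(sectors_to_bytes 524288)      # length of partition 1
root_offset=$(sectors_to_bytes 532480)    # start of partition 2
root_size=$(sectors_to_bytes 7178240)     # length of partition 2

echo "boot: offset=$boot_offset sizelimit=$boot_size"
echo "root: offset=$root_offset sizelimit=$root_size"

# Not run here (needs root). With sizelimit both can be mounted at once:
#   mount -o ro,loop,offset=$boot_offset,sizelimit=$boot_size raspberry_dd_4M.img mntloop1
#   mount -o ro,loop,offset=$root_offset,sizelimit=$root_size raspberry_dd_4M.img mntloop2
# If the ext4 partition still refuses ro, adding noload may help
# (assumption: it skips the journal replay):
#   mount -o ro,noload,loop,offset=$root_offset,sizelimit=$root_size raspberry_dd_4M.img mntloop2
```

As a sanity check, the root offset plus the root size comes to 3947888640 bytes, the total disk size fdisk reported.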

e2fsck -C0 -p -f -v /dev/sdd2

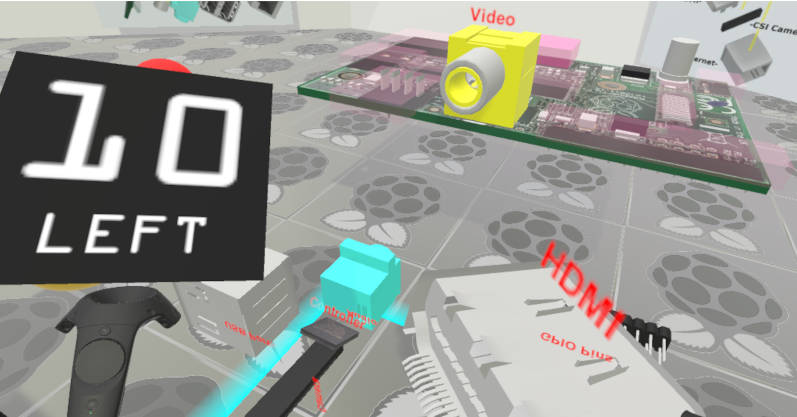

dd bs=4M if=/mnt/sdb1/RaspberryPi/Images/2020-02-13-raspbian-buster-lite.img of=/dev/sdd conv=fsync
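After writing an image with dd it can be reassuring to read the card back and compare it with the source. The sketch below does this with dummy files in a temporary directory: `fake_sdd` stands in for /dev/sdd and is deliberately larger than the image, as a real card would be. `conv=notrunc` is only there because the stand-in is a regular file that dd would otherwise truncate. A real block device does not need it.

```shell
#!/bin/sh
work=$(mktemp -d)
img="$work/source.img"
dev="$work/fake_sdd"                      # stand-in for /dev/sdd

head -c 1048576 /dev/urandom > "$img"     # 1 MiB dummy image
head -c 2097152 /dev/zero > "$dev"        # 2 MiB dummy "card"

# Same write as above, plus notrunc for the regular-file stand-in.
dd bs=4M if="$img" of="$dev" conv=fsync,notrunc 2>/dev/null

# Compare only as many bytes as the image holds; the card is bigger.
img_bytes=$(( $(wc -c < "$img") ))
if cmp -n "$img_bytes" "$img" "$dev"; then
    echo "verified $img_bytes bytes"
fi
```

Against real hardware the comparison would be between the .img file and /dev/sdd, run as root.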

echo "picam2" > /mnt/sdd2/etc/hostname

touch /mnt/sdd1/ssh
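The headless setup steps (hostname plus the empty ssh file on the boot partition) could be bundled into one function, sketched here against a throwaway directory tree rather than a mounted card. `prep_card` is a made-up name. It overwrites etc/hostname so the file holds a single name. Rewriting the 127.0.1.1 line in etc/hosts is an extra step not in the notes above, added on the assumption that the stock image maps that address to the old hostname.

```shell
#!/bin/sh
# Hypothetical helper: prep_card BOOT_MOUNT ROOT_MOUNT NEW_HOSTNAME
prep_card() {
    boot=$1 root=$2 name=$3
    # Overwrite (not append) so the file holds exactly one hostname.
    printf '%s\n' "$name" > "$root/etc/hostname"
    # Assumption: keep the 127.0.1.1 entry in step with the new name.
    if [ -f "$root/etc/hosts" ]; then
        sed -i "s/^127\.0\.1\.1.*/127.0.1.1 $name/" "$root/etc/hosts"
    fi
    # An empty file called ssh on the boot partition enables sshd.
    : > "$boot/ssh"
}

# Demo on a scratch tree standing in for /mnt/sdd1 and /mnt/sdd2.
demo=$(mktemp -d)
mkdir -p "$demo/boot" "$demo/root/etc"
echo "raspberrypi" > "$demo/root/etc/hostname"
printf '127.0.0.1 localhost\n127.0.1.1 raspberrypi\n' > "$demo/root/etc/hosts"

prep_card "$demo/boot" "$demo/root" picam2
cat "$demo/root/etc/hostname"
```

For the real card the call would be `prep_card /mnt/sdd1 /mnt/sdd2 picam2`, run as root with both partitions mounted.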

sudo apt install python3-picamera

sudo apt install python3-pip

pip3 install dropbox

pip3 install RPi.GPIO

sudo pip3 install dropbox

sudo pip3 install RPi.GPIO

Disclaimer:

This page is by me, for me. If you are not me, then please be aware of the following:

I am not responsible for anything that works or does not work, including files and pages made available at www.jumpstation.co.uk.

I am also not responsible for any information (or what you or others do with it) available at www.jumpstation.co.uk.

In fact I'm not responsible for anything ever, so there!